이 글은 필자가 David Silver의 Reinforcement Learning 강좌를 듣고 정리한 글입니다.

The last lecture was about Planning by Dynamic Programming, which solves a known MDP. Now we are going to check how can we solve an unknown MDP (i.e. Model-free RL). To solve an unknown MDP we have to (1) Estimate the value function of an unknown MDP. We usually call this Model-free prediction(Policy evaluation). After that, we will (2) Optimize the value function of an unknown MDP which we call it Model-Free Control. In this lecture, we are going to learn about the Model-free Prediction methodology, which consists of Monte-Carlo and Temporal-Difference.

We have to focus on how Monte-Carlo and Temporal-Difference methodology estimate the value function vπ of an unknown MDP(Then what consists of unknown MDP? How can we get the G_t? "No knowledge of MDP transitions/rewards").

- Goal: learn vπ(=Eπ[Gt|St=s]) from episodes of experience under policy π

- S1,A1,R2,⋯,Sk∼π

🌳 Monte-Carlo Policy Evaluation

- MC methods

- learn directly from complete episodes of experience. On Monte-Policy Evaluation, it uses empirical mean return instead of expected return.

- Caveat: can only apply MC to episodic MDPs

- All episodes must terminate

- Monte-Carlo Policy Evaluation

- To evaluate state s, they update state s is visited in an episode from the first time-step or every time-step. (Temporal Difference = Incremental every-visit Monte-Carlo)

- Plain Update: Value is estimated by mean return V(s)=S(s)N(s). By law of large numbers, V(s)→vπ(s) as N(s)→∞

- Increment counter N(s)←N(s)+1

- Increment total return S(s)←S(s)+Gt

- Incremental Monte-Carlo Updates: Update V(s) incrementally after episode S1,A1,R2,⋯,ST. For each state St with return Gt

- Increment counter N(St)←N(St)+1

- Increment total return V(St)←V(St)+1N(St)(Gt−V(St)). (If non-stationary problem, it can be useful to track a running mean, i.e. forget old episodes: V(St)←V(St)+α(Gt−V(St))

- MC converges to solution with minimum mean-squared error

🌳 Temporal-Difference Policy evaluation

- TD methods

- TD learns directly from incomplete episodes, by bootstrapping. learn vπ online from experience under policy π.

- Temporal-Difference Policy Evaluation

- To evaluate state s, they update state s is visited in an episode from every time-step: Incremental every-visit Monte-Carlo.

- How to Update: Update V(St) toward estimated reward Rt+1+γV(St+1): V(St)⟵V(St)+α(Rt+1+γV(St+1)−V(St))

- TD error: δ=Rt+1+γV(St+1)−V(St)

- TD target: Rt+1+γV(St+1)

- TD target is biased estimate of vπ(St).

- True (Unbiased) TD target is Rt+1+γvπ(St+1)

- Unbiased estimate (pi?전체 episode에 대한 정보를 바탕으로 Update 해야 하는데 한 스텝에서만의 대한 처리라서 그런가? 근데 왜 policy가 여기서 나오지? episode 아닌가?. TD(0) converges to vπ(s)는 무슨 뜻인가.$)of vπ(St) is Return: Gt=Rt+1+γRt+2+⋯+γT−1RT

- TD target is much lower variance than the return. Because return depends on many random actions, transitions, rewards. TD target depends on one random action, transition, reward.

- TD(0) converges to solution of max likelihood Markov model

🌳 MC vs. TD

| Temporal-Difference | Monte-Carlo |

| learn before knowing the final outcome (online learning) |

wait until end of episode before return is known. |

| V(St)←V(St)+α(Rt+1+γV(St+1)−V(St)) | V(St)←V(St)+α(Gt−V(St)) |

| learn without the final outcome / works in continuing (non-terminating) environments. |

only works for episodic (terminating) environments. |

| Low variance, Some bias | High variance, Zero bias |

| Usually more efficient than MC | Good convergence properties |

| More sensitive to initial value | Not very sensitive to initial value |

| Exploits Markov property / Usually more efficient in Markov environment |

Do not exploit Markov property / Usually more effective in non-Markov environments. |

| Bootstraps | No bootstrap |

| Samples | Samples |

- Dynamic Programming V(St)←Eπ[Rt+1+γV(St+1)]

- Bootstraps, No sample

- Unified View of Reinforcement Learning

🌳 Deeper on Temporal-Difference

- n-step TD

- n-step return G(n)t=Rt+1+γRt+2+⋯γn−1Rt+n+γnV(St+n)

- The λ-return Gλt combines all n-step returns G(n)t using decay λ: Gλt=(1−λ)∑∞n=1λn−1G(n)t

- n-step TD learning V(St)←V(St)+α(G(n)t−V(St))

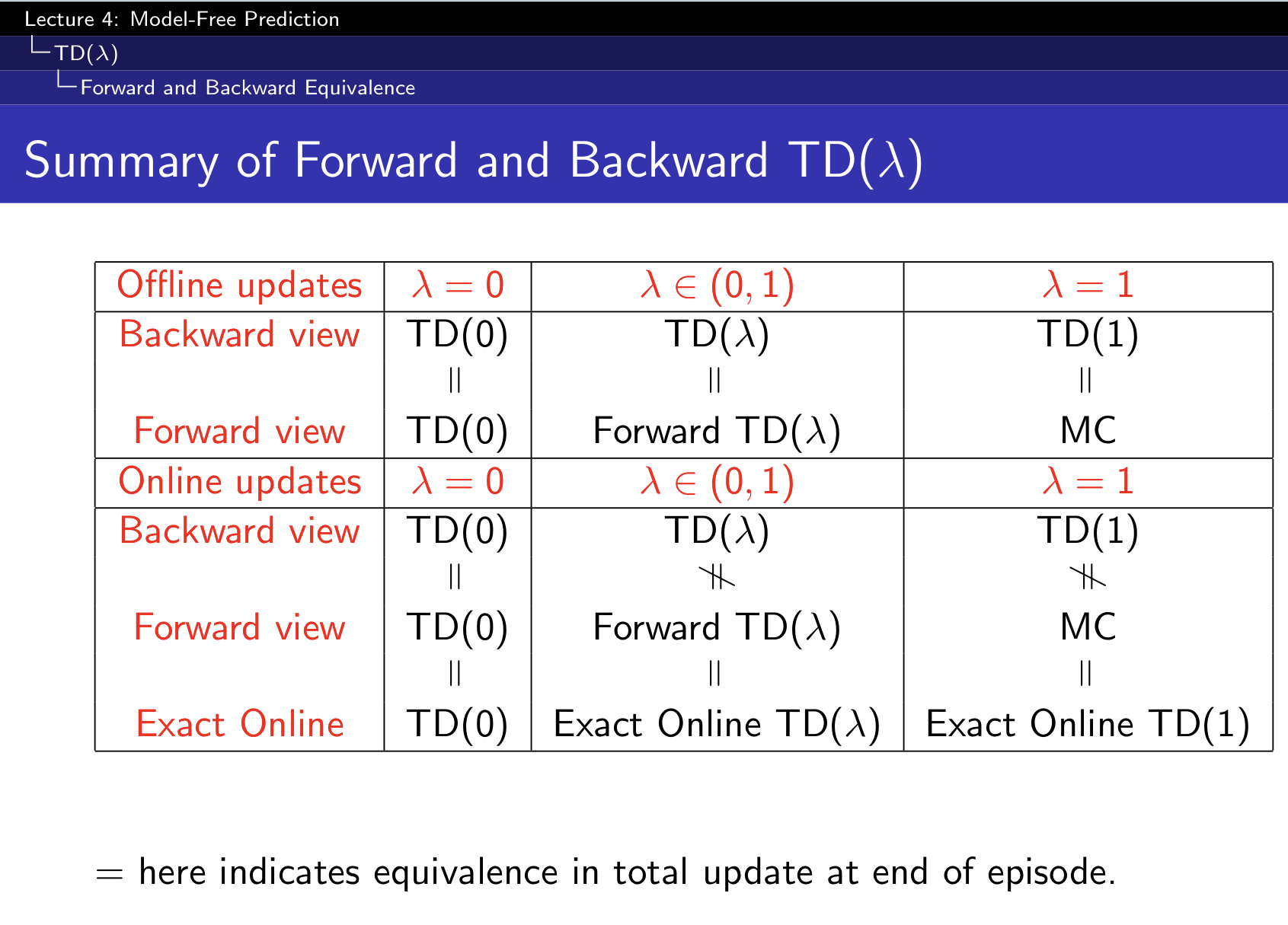

- Forward view of TD(λ) V(St)←V(St)+λ(Gλt−V(St))

- Update value function V(s) towards the λ-return

- Foward-view looks into the future to compute Gλt

- if λ=0, only current state is updated

- when λ=1, credit is deferred until end of episodes (Equivalent to every-visit Monte-Carlo)

- Like MC, can only be computed from complete episodes

- Backward view of TD(λ) V(s)←V(s)+αδtEt(s) (TD-error \delatt)

- Update value function V(s) for online, every step s, from incomplete sequence

- Keep an eligibility trace for every state s

- Frequency heuristic: assign credit to most frequent states

- Recency heuristic: assign credit to most recent states

- Sum

![[David Silver] 4. Model-Free Prediction: Monte-Carlo, Temporal-Difference](https://img1.daumcdn.net/thumb/R750x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FEtEse%2FbtryQSnIA8O%2FYHuMwxJRK0EmnnzP5kDI91%2Fimg.png)